LLM chat bots are one of the coolest and most useful technologies to come out – an encyclopedia and AI assistant in conversational form at your fingertips.

There’s the standard ChatGPT, but what if you want to run things on your own computer, with something open source and free to use with few restrictions?

Good news, you have options! 🙂

In this post I’ll go over GPT4All for running things locally.

– GPT4All [MIT License] (https://github.com/nomic-ai/gpt4all)

“GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU.

Note that your CPU needs to support AVX or AVX2 instructions.”
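If you are not sure whether your CPU has AVX or AVX2, here is a minimal sketch of one way to check on Linux. It assumes an x86 machine where `/proc/cpuinfo` has a `flags` line; the function names `parse_cpu_flags` and `avx_support` are my own, not part of GPT4All. On Windows or Mac you would need a different approach.

```python
import platform
from pathlib import Path


def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Collect the CPU feature flags listed in /proc/cpuinfo-style text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Assumption: x86 Linux kernels list features on lines keyed "flags".
        if key.strip() == "flags":
            flags.update(value.split())
    return flags


def avx_support(cpuinfo_text: str) -> dict[str, bool]:
    """Report whether the avx and avx2 flags appear in the given text."""
    flags = parse_cpu_flags(cpuinfo_text)
    return {"avx": "avx" in flags, "avx2": "avx2" in flags}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if platform.system() == "Linux" and cpuinfo.exists():
        print(avx_support(cpuinfo.read_text()))
    else:
        print("This sketch only reads /proc/cpuinfo on Linux.")
```

If either value comes back `True`, the CPU meets the requirement quoted above.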

If you want more flexibility, you can also explore this one, though the setup can be more involved.

– text-generation-webui by oobabooga [AGPL-3.0 license] (https://github.com/oobabooga/text-generation-webui)

There is also:

– koboldcpp [AGPL-3.0 license] (https://github.com/LostRuins/koboldcpp)

GPT4All

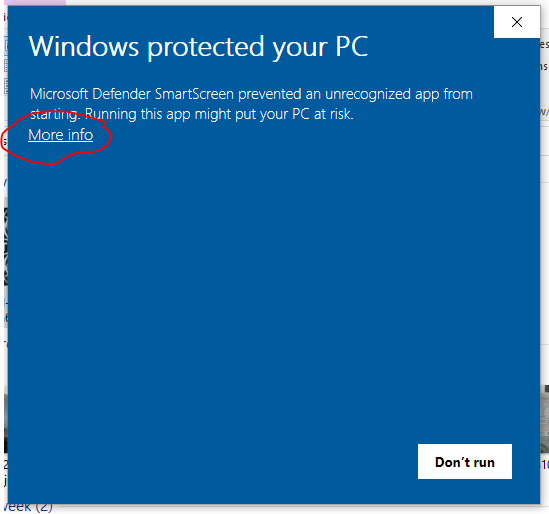

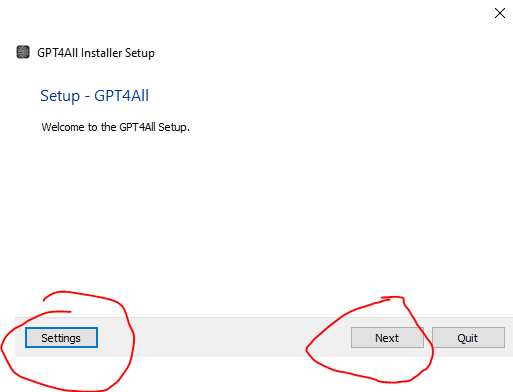

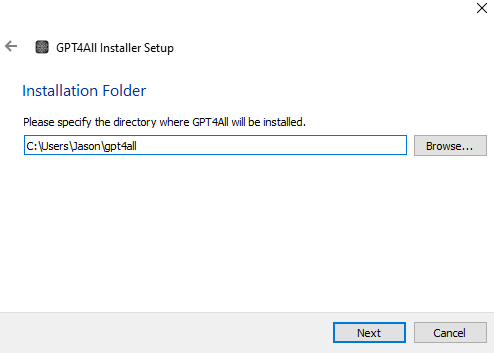

GPT4All is great because it comes with an installer for Windows, Mac, and Linux (Ubuntu).

If you care which disk things are stored on (the model files can be large, and a faster drive helps), go into Settings -> Local cache tab and change the location there.

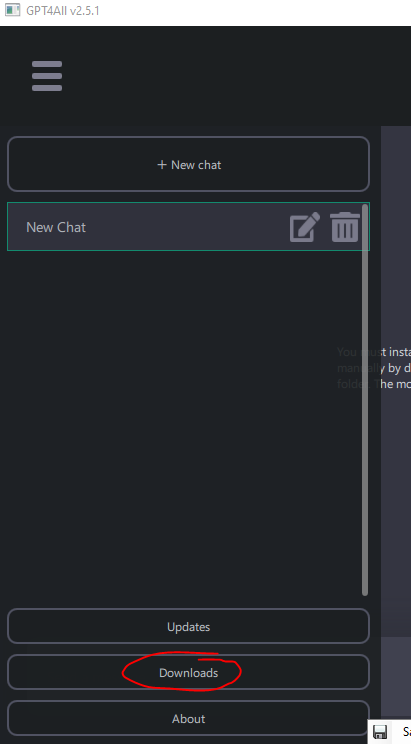

A nice touch is that when you start it up, it will prompt you to update whenever a new version is available.

When you run it for the first time it should automatically bring up the downloads section.

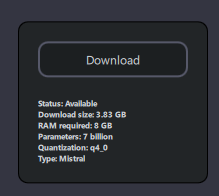

The GPT4All download information is great, as it recommends models and gives details about each one.

Setting the download path to a large drive is a good idea – ideally a large fast SSD if available 🙂

It also helps to pick a path that is easy to find, in case you want to download files from Hugging Face etc. yourself.
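Before pointing the download path at a drive, you can quickly check how much room it has. This is just a small sketch using Python's standard library; the helper names `free_gib` and `has_room_for` and the 4 GiB figure in the example are my own placeholders, so substitute the size shown for the model you actually pick.

```python
import shutil
from pathlib import Path


def free_gib(path: str) -> float:
    """Return the free space, in GiB, on the drive that holds `path`."""
    usage = shutil.disk_usage(path)
    return usage.free / 1024**3


def has_room_for(path: str, model_size_gib: float) -> bool:
    """True if the drive holding `path` can fit a file of the given size."""
    return free_gib(path) >= model_size_gib


if __name__ == "__main__":
    # Hypothetical example: check the home folder for a 4 GiB model file.
    folder = str(Path.home())
    print(f"{free_gib(folder):.1f} GiB free in {folder}")
    print("Room for a 4 GiB model:", has_room_for(folder, 4.0))
```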

You can also download supported .gguf format models from Hugging Face and use them.
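If you download files by hand, it can be handy to confirm they really are GGUF files before pointing an app at them. The sketch below relies on the GGUF header starting with the four bytes `GGUF` followed by a 32-bit version number, and it assumes little-endian files, which is the common case. The function names are my own and this is not how GPT4All itself validates models.

```python
import struct
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # the first four bytes of a GGUF file


def gguf_version(path):
    """Return the GGUF version number, or None if the header does not match."""
    with open(path, "rb") as f:
        header = f.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # Assumption: little-endian unsigned 32-bit version after the magic.
    (version,) = struct.unpack("<I", header[4:8])
    return version


def list_gguf_models(folder):
    """Map each *.gguf file name in `folder` to its version (None if invalid)."""
    return {p.name: gguf_version(p) for p in sorted(Path(folder).glob("*.gguf"))}


if __name__ == "__main__":
    # Hypothetical folder: replace with your own download path.
    print(list_gguf_models("."))
```

A file that shows `None` here was probably cut off mid-download or is in a different format.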

For example, go to https://huggingface.co/TheBloke/CodeLlama-7B-Python-GGUF to see a list of the clients / libraries available.

In the next post I’ll demo some open source LLMs and see how they do on some coding and explanation tasks.

The follow up piece: Working with local opensource LLMs